Variants on recurrent nets

- Architectures

- How to train recurrent networks of different architectures

- Synchrony

- The target output is time-synchronous with the input

- The target output is order-synchronous, but not time-synchronous

One to one

No recurrence in model

- Exactly as many outputs as inputs

- One to one correspondence between desired output and actual output

Common assumption

- The weight on the divergence at each time step is typically set to 1.0
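
As a minimal sketch of this assumption, the divergence for a time-synchronous sequence can be written as a weighted sum of per-time divergences. The function names, the `weights` argument, and the choice of cross-entropy as the per-time divergence are illustrative assumptions, not something the notes specify.

```python
import math

def cross_entropy(target_index, probs):
    """Divergence at one time step: negative log probability of the target class."""
    return -math.log(probs[target_index])

def sequence_divergence(targets, outputs, weights=None):
    """Weighted sum of per-time divergences for time-synchronous targets."""
    if len(targets) != len(outputs):
        raise ValueError("time-synchronous training needs one target per output")
    if weights is None:
        # Assumed default: every time step gets weight 1.0.
        weights = [1.0] * len(targets)
    return sum(w * cross_entropy(d, y) for w, d, y in zip(weights, targets, outputs))

# Three time steps, two classes; each output is a probability distribution.
outputs = [[0.5, 0.5], [0.25, 0.75], [0.8, 0.2]]
targets = [0, 1, 0]
total = sequence_divergence(targets, outputs)
```

With all weights equal to 1.0, `total` is simply the sum of the three per-time cross-entropies.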

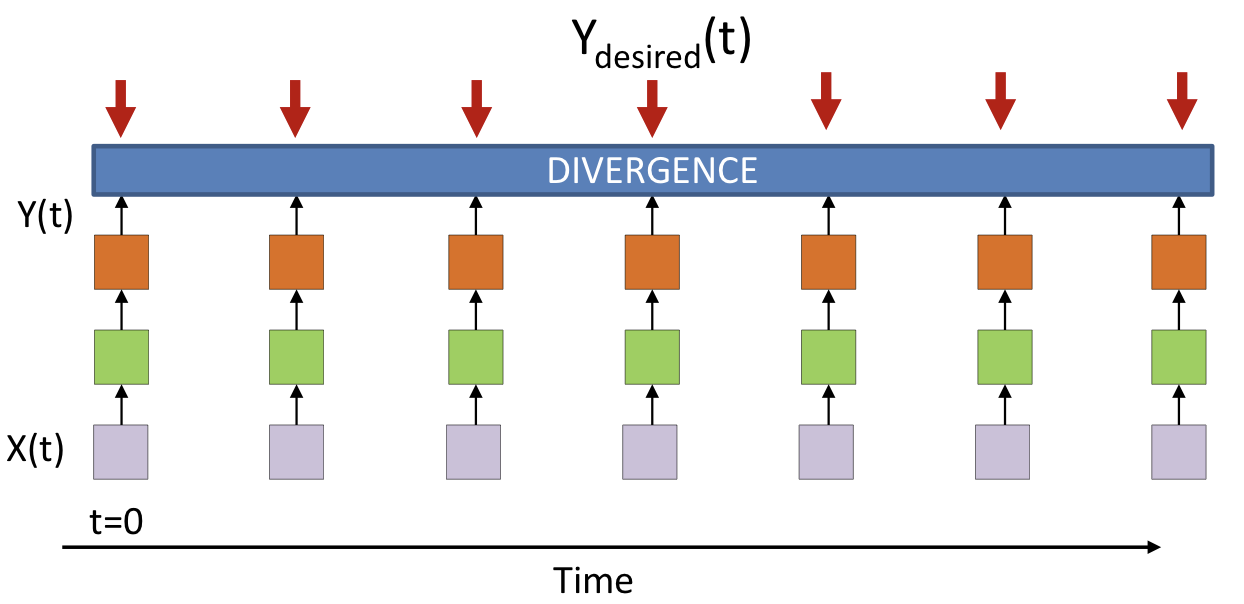

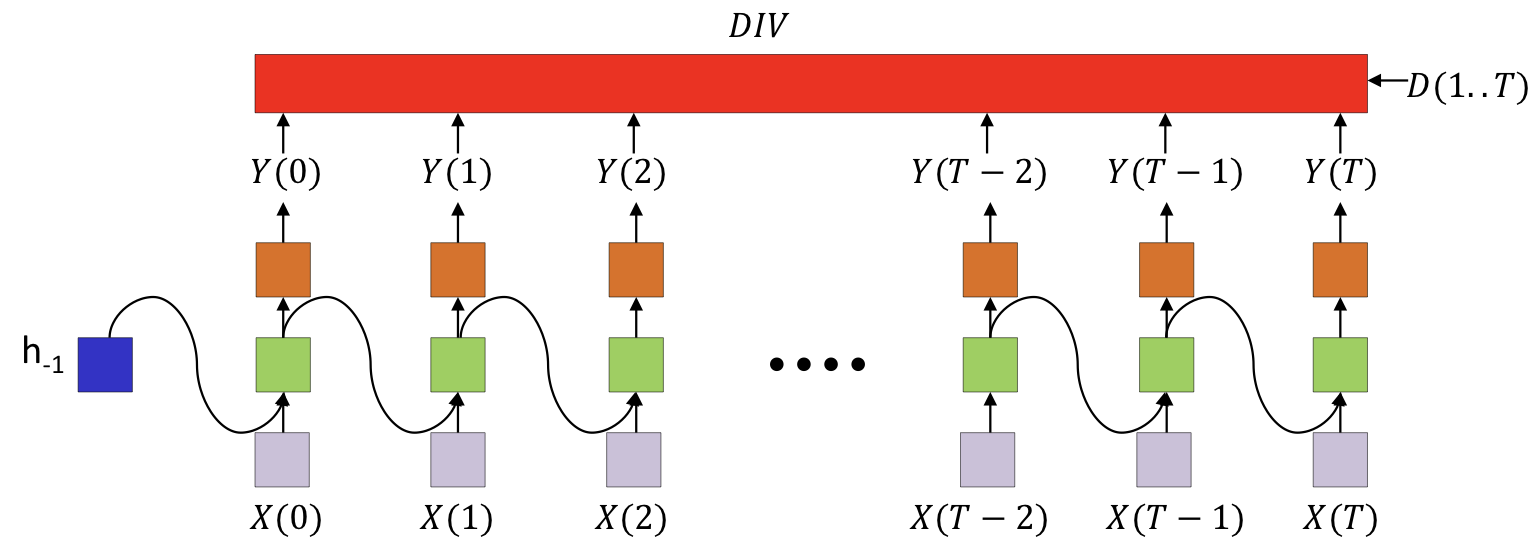

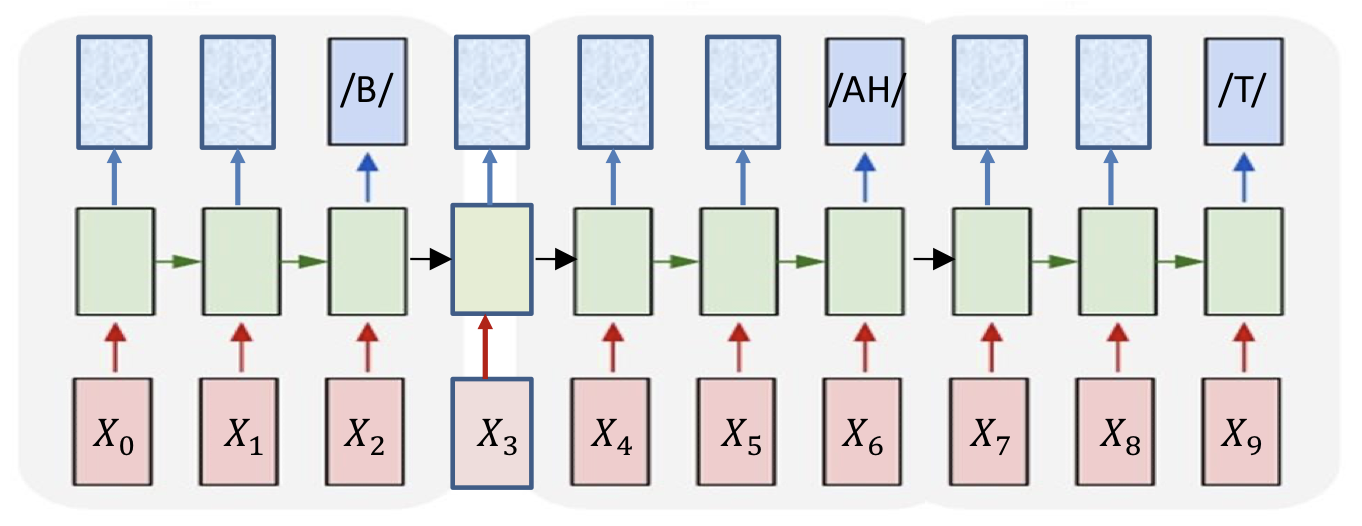

Many to many

- The divergence computed is between the sequence of outputs by the network and the desired sequence of outputs

- This is not just the sum of the divergences at individual times

Language modelling: Representing words

Represent words as one-hot vectors

- The representation is sparse: each vector has a single nonzero entry

- Makes no assumptions about the relative importance of words
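
A small sketch of one-hot encoding, using a toy five-word vocabulary invented for illustration. It shows both properties listed above: each vector has a single nonzero entry, and every pair of distinct words is equally far apart, so the representation says nothing about how words relate.

```python
def one_hot(index, vocab_size):
    """Return a vector of zeros with a single 1.0 at the given index."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec

def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

vocab = ["the", "cat", "sat", "on", "mat"]          # toy vocabulary (assumption)
word_to_index = {w: i for i, w in enumerate(vocab)}

cat = one_hot(word_to_index["cat"], len(vocab))
mat = one_hot(word_to_index["mat"], len(vocab))
sat = one_hot(word_to_index["sat"], len(vocab))
```

Here `squared_distance(cat, mat)` and `squared_distance(cat, sat)` are both 2.0, as they are for any two distinct words.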

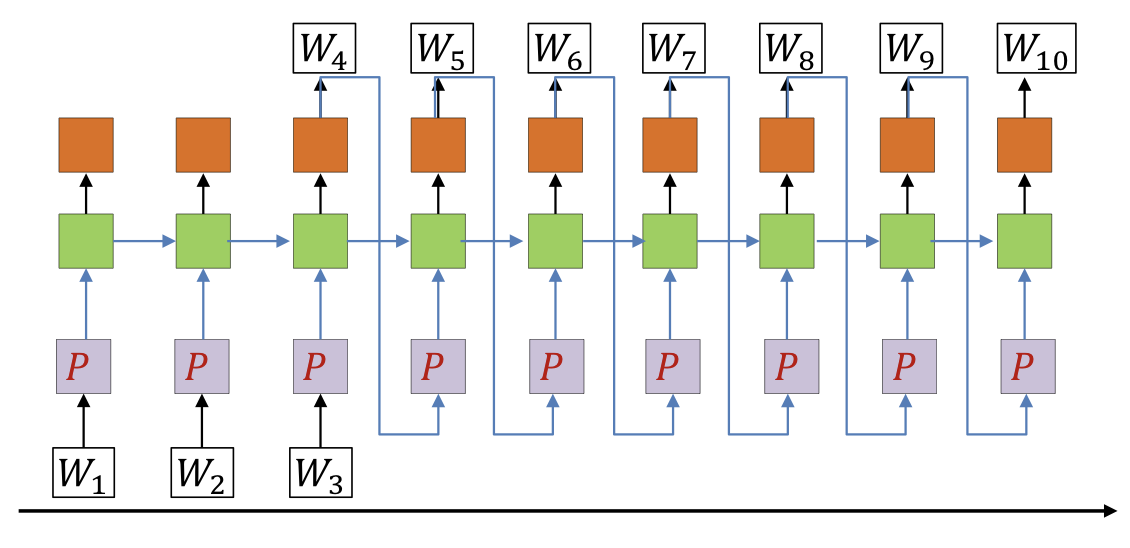

The Projected word vectors

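- Replace every one-hot vector $W_i$ by $P W_i$

- $P$ is an $M \times N$ matrix, where $N$ is the vocabulary size and $M$ is the dimension of the projected vectors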
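
A sketch of the projection, assuming it is a matrix (called `P` here) with one column per vocabulary word. The values are made up for illustration. Multiplying `P` by a one-hot vector just selects the corresponding column, so each word ends up with its own low-dimensional vector.

```python
def project(P, vec):
    """Multiply a matrix P (list of rows) by a vector."""
    return [sum(p * x for p, x in zip(row, vec)) for row in P]

# Hypothetical 2 x 4 projection: 2 output dimensions, 4 vocabulary words.
P = [
    [0.1, 0.4, -0.3, 0.9],
    [0.7, -0.2, 0.5, 0.0],
]

w2 = [0.0, 0.0, 1.0, 0.0]            # one-hot vector for the word at index 2
projected = project(P, w2)           # 2-dimensional representation of that word
column_2 = [row[2] for row in P]     # the same values, read directly from P
```

Because of this equivalence, implementations usually store the projection as a lookup table and index into it rather than performing the multiplication.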

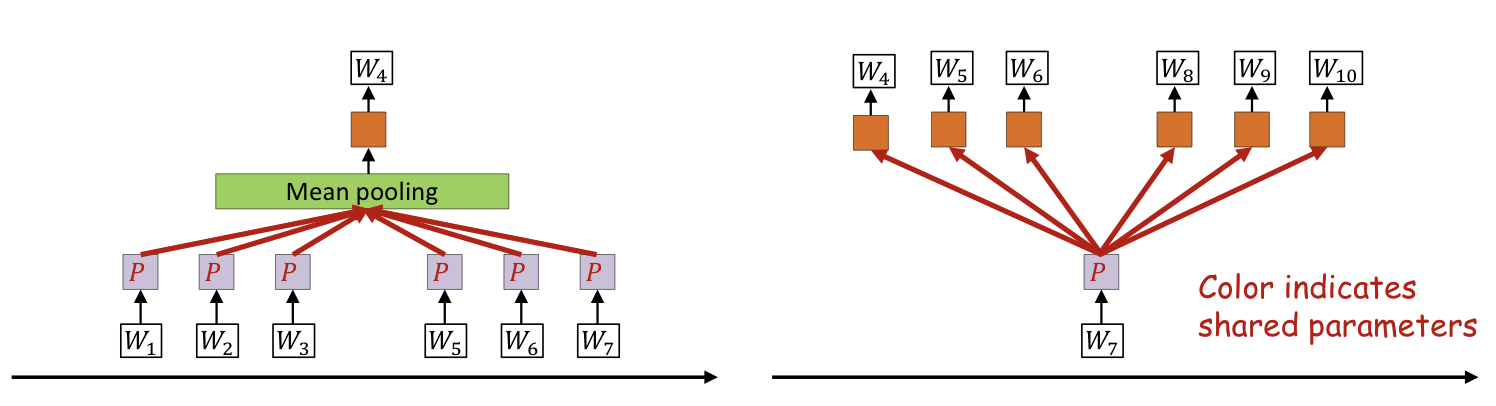

How to learn projections

- Soft bag of words

- Predict word based on words in immediate context

- Without considering specific position

- Skip-grams

- Predict adjacent words based on current word

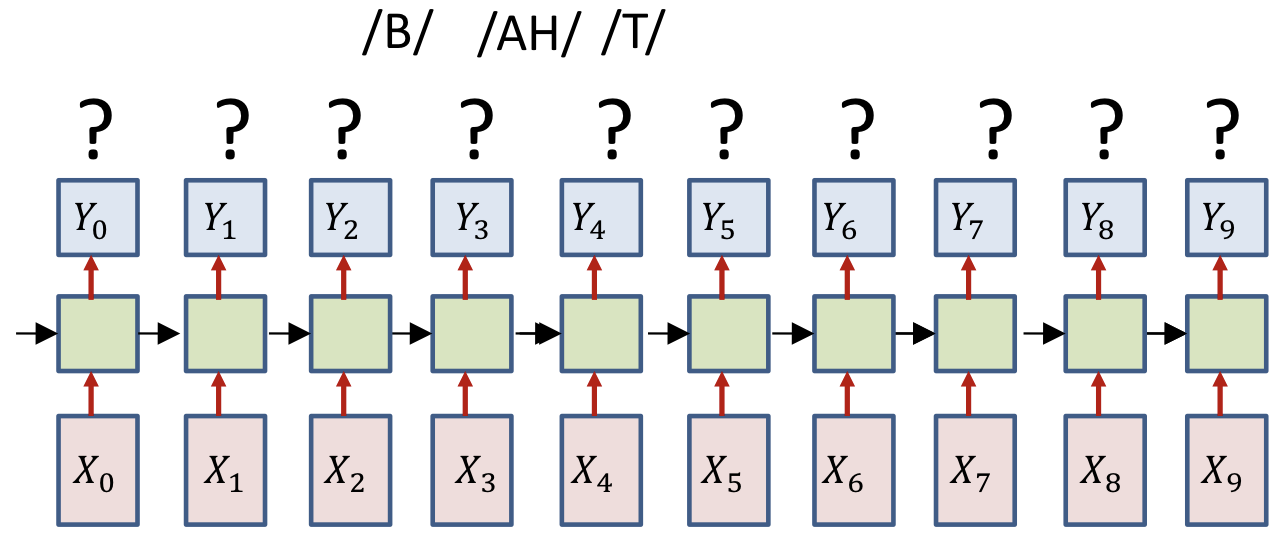
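
The two objectives above differ in which way the prediction runs. The sketch below only builds the training pairs each one would use; the model that consumes them is omitted. The function names and the `window` parameter are illustrative assumptions.

```python
def bag_of_words_pairs(tokens, window=2):
    """(context words, target word) pairs: predict a word from its neighbours."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        # Sorting discards the position of each word inside the window.
        pairs.append((sorted(context), target))
    return pairs

def skip_gram_pairs(tokens, window=2):
    """(current word, neighbouring word) pairs: predict neighbours from a word."""
    pairs = []
    for i, current in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((current, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs

tokens = ["the", "cat", "sat", "on", "the", "mat"]
cbow = bag_of_words_pairs(tokens, window=1)
skip = skip_gram_pairs(tokens, window=1)
```

For the word "cat", the bag-of-words pair is `(["sat", "the"], "cat")`, while skip-gram yields the two pairs `("cat", "the")` and `("cat", "sat")`.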

Many to one

- Example

- Question answering

- Input: Sequence of words

- Output: Answer at the end of the question

- Speech recognition

- Input: Sequence of feature vectors (e.g. Mel spectra)

- Output: Phoneme ID at the end of the sequence

Outputs are actually produced for every input

- We only read it at the end of the sequence
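
A minimal sketch of this idea, using a toy recurrent net with a scalar hidden state. The weights and the `tanh` activation are arbitrary assumptions chosen for illustration. The net emits a value at every time step, and the many-to-one setting reads only the last one.

```python
import math

def rnn_outputs(inputs, w_in, w_rec, w_out):
    """Run a tiny scalar-state recurrent net and return the output at every time."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)   # hidden state carries the history
        outputs.append(w_out * h)             # an output exists at every time step
    return outputs

inputs = [0.5, -1.0, 0.25, 2.0]
all_outputs = rnn_outputs(inputs, w_in=0.8, w_rec=0.5, w_out=1.5)
final_output = all_outputs[-1]                # many-to-one: only this value is read
```

Although only `final_output` is used, it depends on the whole input sequence through the recurrent state.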

How to train

- Define the divergence everywhere

- Typical weighting scheme for speech

- All are equally important

- Problem like question answering

- Answer only expected after the question ends

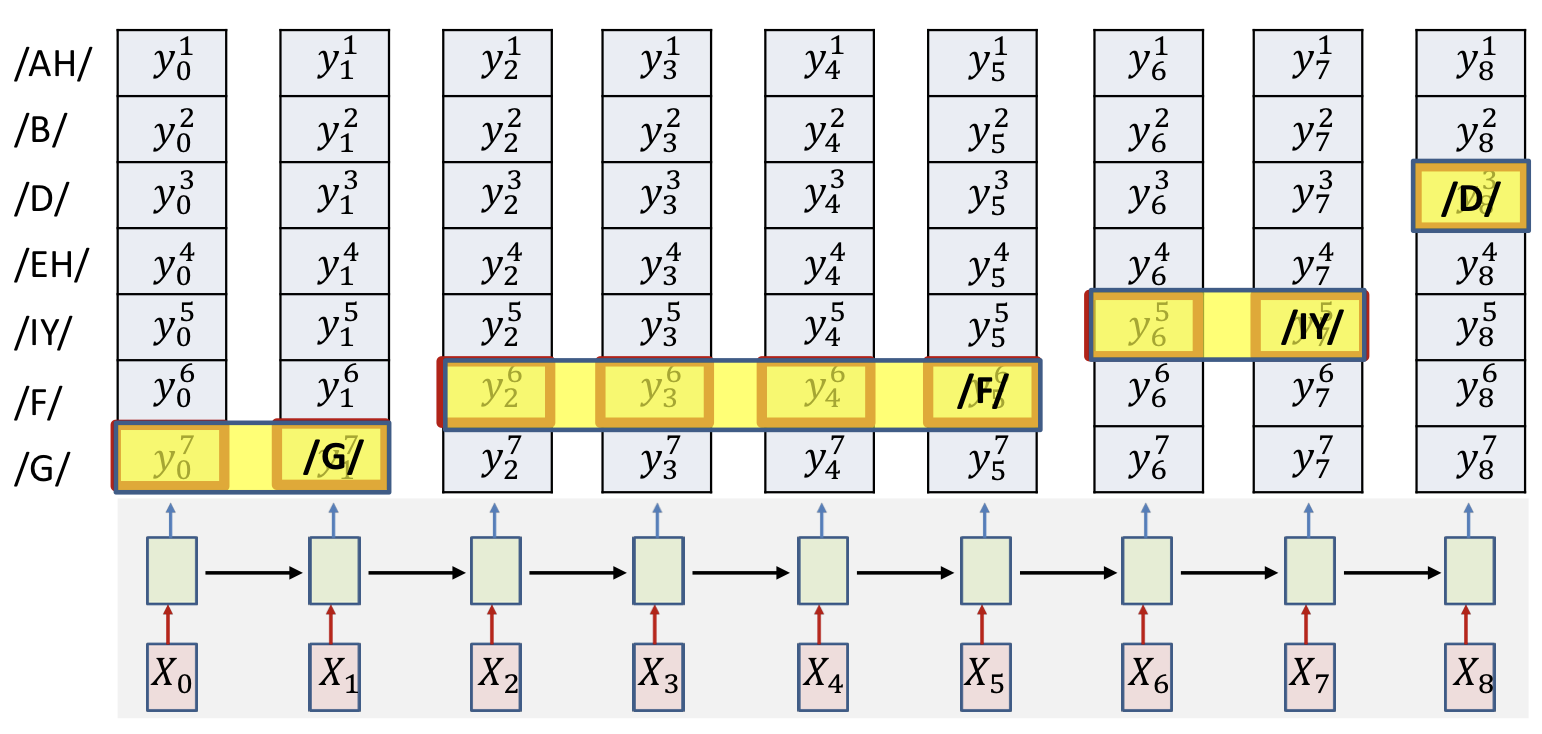
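
The two weighting schemes can be sketched as follows. This assumes a single target label for the whole sequence and cross-entropy as the per-time divergence. Putting all the weight on the final step is one reading of "answer only expected after the question ends", not something the notes state explicitly.

```python
import math

def weighted_divergence(target_index, outputs, weights):
    """Sum over time of weight * cross-entropy against one sequence-level target."""
    return sum(w * -math.log(y[target_index]) for w, y in zip(weights, outputs))

# Class probabilities produced at each of four time steps (two classes).
outputs = [[0.5, 0.5], [0.4, 0.6], [0.25, 0.75], [0.1, 0.9]]
target = 1                                      # the one label for the whole sequence
T = len(outputs)

uniform_weights = [1.0] * T                     # speech-style: all times equally important
final_only_weights = [0.0] * (T - 1) + [1.0]    # QA-style (assumed): only the end counts

div_uniform = weighted_divergence(target, outputs, uniform_weights)
div_final = weighted_divergence(target, outputs, final_only_weights)
```

With uniform weights, every time step contributes to the gradient. With the final-only weights, the earlier outputs are left unconstrained.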

Sequence-to-sequence

- How do we know when to output symbols?

- In fact, the network produces outputs at every time

- Which of these are the real outputs?

- Outputs that represent the definitive occurrence of a symbol

- Option 1: Simply select the most probable symbol at each time

- Merge adjacent repeated symbols, and place the actual emission of the symbol in the final instant

- Cannot distinguish between an extended symbol and repetitions of the symbol

- Resulting sequence may be meaningless

- Option 2: Impose external constraints on what sequences are allowed

- Only allow sequences corresponding to dictionary words

- Sub-symbol units

- How do we train when no timing information is provided?

- Only the sequence of output symbols is provided for the training data

- But no indication of which one occurs where

- How do we compute the divergence?

- And how do we compute its gradient?
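
Returning to Option 1 above, here is a minimal sketch of greedy decoding: take the most probable symbol at each time, then merge adjacent repeats. The symbol set and probabilities are invented for illustration, and the sketch does not record the instant at which each merged symbol is emitted.

```python
from itertools import groupby

def greedy_decode(prob_rows, symbols):
    """Pick the most probable symbol at each time, then merge adjacent repeats."""
    best_per_time = [
        symbols[max(range(len(row)), key=row.__getitem__)] for row in prob_rows
    ]
    merged = [symbol for symbol, _ in groupby(best_per_time)]
    return best_per_time, merged

symbols = ["a", "b"]
# Per-time output probabilities over the symbols (rows are time steps).
prob_rows = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.4, 0.6], [0.7, 0.3]]
per_time, merged = greedy_decode(prob_rows, symbols)
```

The per-time choices are `a a b b a`, which merge to `a b a`. The same merged result would come from a true sequence `a b b a`, which is the ambiguity noted above between an extended symbol and a repeated one.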